The Gap Is Widening: 2025 AI Recap and 10 Predictions for What's Next (Part 1)

2025 was an incredible year of AI progress. I wanted to take the time to map out the changes that mattered this year, summarise the key trends, and take a punt on where things might land for 2026.

I've also asked my vibe-coded LLM council for its 2026 predictions, which I have included and which will be a bit of fun to track as we go into next year.

There is a lot to cover, so this comes in two parts: a 2025 recap in Part 1 and 2026 predictions in Part 2.

TL;DR

2025 in summary:

- Frontier AI experienced extraordinary intelligence gains across both closed-source and open-source ecosystems

- Competition intensified – OpenAI led for most of the year, but Anthropic and Google gained serious ground and overtook it in some areas by year-end

- Open-source models now lag frontier capabilities by ~6 months, putting pressure on the frontier labs to stay ahead on model capability while building new AI products and platforms

- The world remained significantly compute-constrained, with demand exceeding supply. Massive CapEx is now flowing into data centres that will come online later in 2026 and through 2027-2030

- Consumer adoption exploded – ChatGPT reached ~800 million weekly active users

- Data constraints are becoming the next bottleneck, with perhaps two major training runs left before we hit limits with the current approaches to model pre-training

Part 1: The 2025 Recap

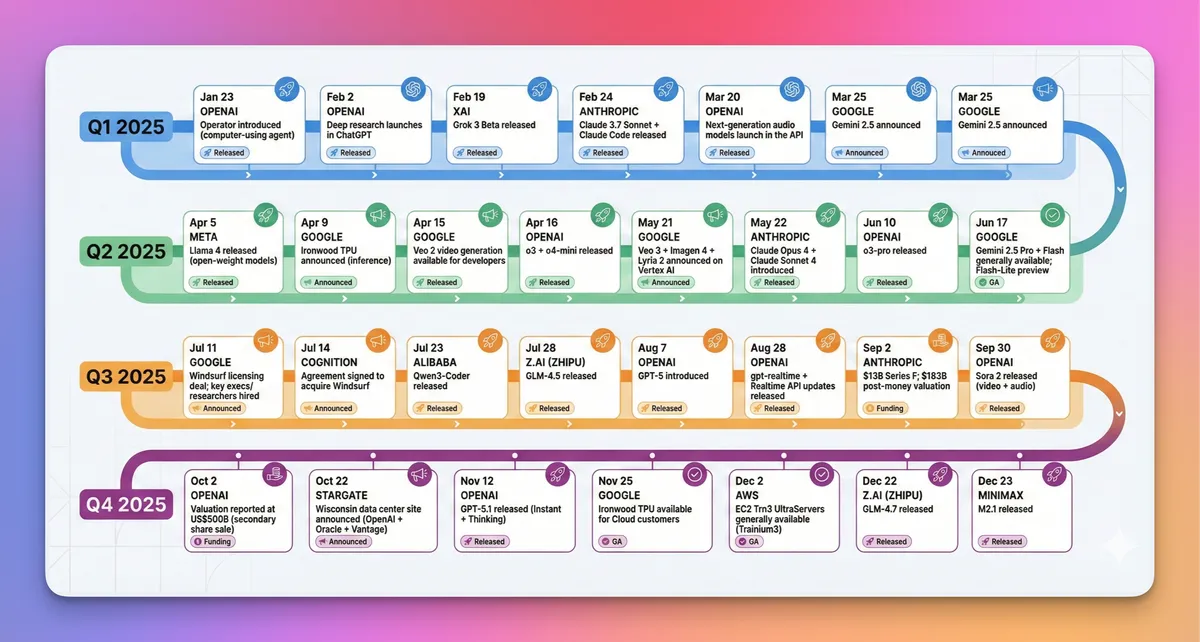

AI 2025 Timeline

Intelligence Progress: A Year of Saturation

At the start of 2025, GPT-4-class models (notably GPT-4o) were predominant. By year-end, we've seen an explosion of capability:

- OpenAI shipped o1 Pro, o3, o3 Pro, GPT-5, and their thinking variants

- Anthropic delivered various Sonnet, Haiku and Opus releases, closing the year with Opus 4.5 – an exemplary coding model that works at speed

- Google released Gemini 3 Pro, which shows moments of brilliance, and a range of model variants (including the Flash range). Google has consistently demonstrated an ability to move the cost/intelligence frontier across all models each time it brings its AI products to market

Models at the start of the year could handle many simple creative and document-writing tasks, but had high hallucination rates and an inability to say "I don't know." As we close out 2025, almost all key intelligence benchmarks across coding, general knowledge, and graduate-level science, maths, and physics are saturating, and fast. Hallucination rates are significantly lower, and certain models are starting to perform well on "I don't know" responses and safety protocols.

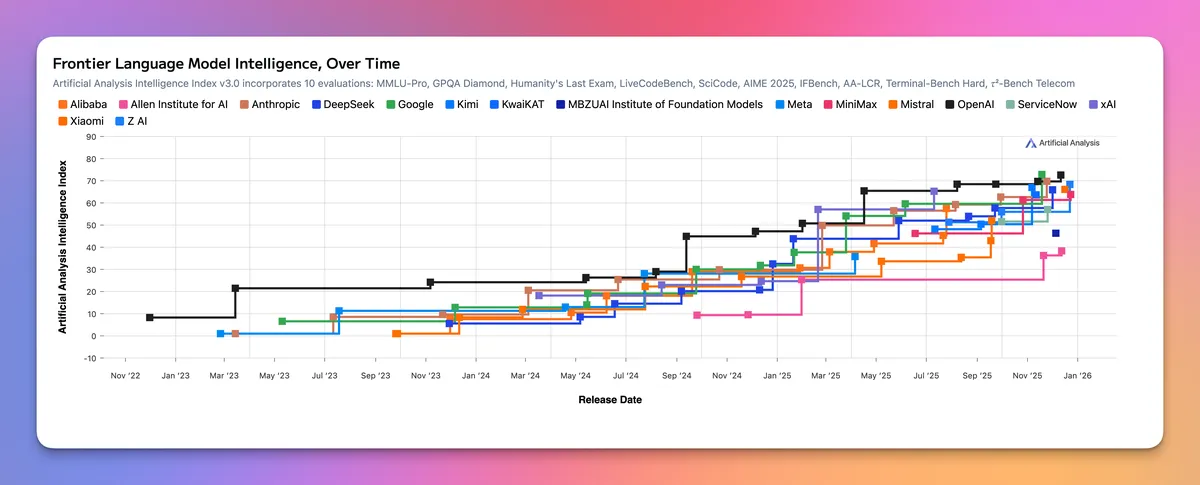

Artificial Analysis - Intelligence Index, Dec 2025

The real story was behind the benchmarks - models became dramatically more dependable when wrapped in proper harnesses: tools, eval loops, critique, tests, and supervision. This has enabled software engineers to shift from writing code to supervising agents that write it for them – focusing on outcomes over keystrokes, especially for commodity work across the most popular languages, systems and patterns.
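To make the harness idea concrete, here is a minimal sketch of a generate, test, and retry loop. It is an illustration under stated assumptions, not any lab's actual tooling: `stub_model` is a hypothetical stand-in for a real model call, and `run_tests`, `harness`, and `Attempt` are names invented for this example.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Attempt:
    code: str
    passed: bool
    feedback: str


def run_tests(code: str) -> Tuple[bool, str]:
    """Run candidate code against a tiny test suite and report the result."""
    namespace: dict = {}
    try:
        # Illustration only: a real harness would run this in a sandbox.
        exec(code, namespace)
        add = namespace["add"]
        assert add(2, 3) == 5
        assert add(-1, 1) == 0
    except Exception as exc:
        return False, f"{type(exc).__name__}: {exc}"
    return True, "all tests passed"


def harness(generate: Callable[[Optional[str]], str], max_rounds: int = 3) -> List[Attempt]:
    """Ask for code, test it, and feed failures back until it passes or rounds run out."""
    history: List[Attempt] = []
    feedback: Optional[str] = None
    for _ in range(max_rounds):
        code = generate(feedback)
        passed, feedback = run_tests(code)
        history.append(Attempt(code, passed, feedback))
        if passed:
            break
    return history


def stub_model(feedback: Optional[str]) -> str:
    """Hypothetical model: the first draft is buggy, the revision is correct."""
    if feedback is None:
        return "def add(a, b):\n    return a - b\n"
    return "def add(a, b):\n    return a + b\n"


attempts = harness(stub_model)
print(len(attempts), attempts[-1].passed)
```

The point of the sketch is that the dependability comes from the loop rather than from any single generation: the tests catch the bad first draft, and the feedback drives the revision.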

The Competition Heats Up

OpenAI has led for most of the year, but Anthropic and Google have gained significant ground and, in some areas, surpassed OpenAI. Open-source options have proliferated and offer a compelling choice and price differential for those comfortable with these models' provenance.

The DeepSeek Moment (January 2025):

The year's first major shock came when Chinese startup DeepSeek released DeepSeek-R1 – an open model that DeepSeek claimed matched Western closed models at a fraction of the training cost (though analysts debated what that figure captured). It quickly ranked among the top models on major public leaderboards and suggested the U.S. was "not as far ahead in AI" as assumed - a genuine "Sputnik moment" for the industry. DeepSeek has since followed up with some compelling new versions and innovations in mathematics.

On the open-source side, Chinese and Asian model labs are now dominating:

- DeepSeek (R1 and V3)

- Kimi K2 (Moonshot AI's trillion-parameter open model – by late 2025 outperforming some U.S. models on key benchmarks)

- Qwen (Alibaba), GLM (Zhipu AI), and other Chinese labs

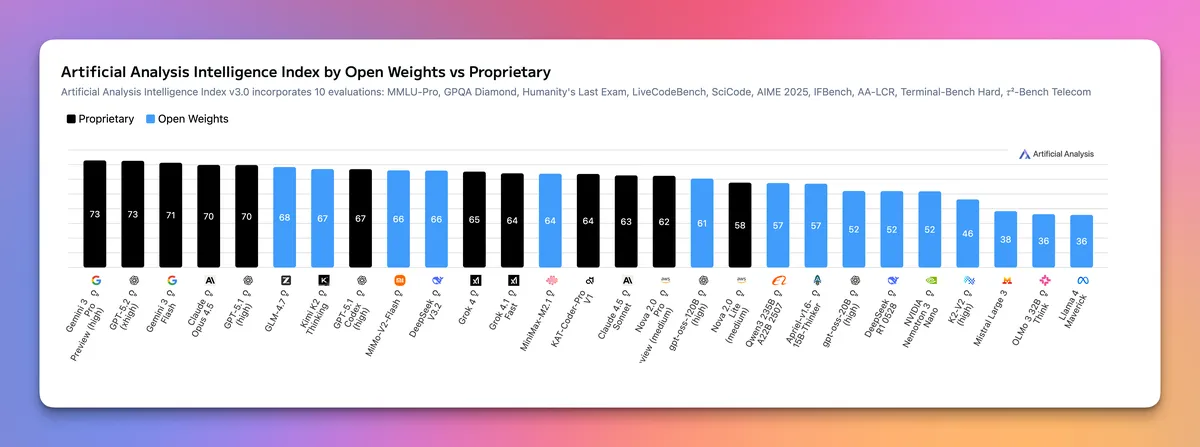

Artificial Analysis - Intelligence Index by Open Weights vs Proprietary, Dec '25

These models offer excellent general and coding capabilities and are rapidly advancing, putting competitive pressure on frontier labs to not just stay ahead, but to ensure their cost-to-value ratio makes sense when developers and enterprises have choice.

OpenAI Goes Open:

In a major pivot in August and going back to its roots, OpenAI released its own open-weight models (gpt-oss-120b / gpt-oss-20b) under Apache 2.0. This landed amid intensifying competition from open-weight models, including DeepSeek.

The Compute Crunch

Unbeknownst to most consumers, there are huge constraints on the ability of labs to access the latest chips and run cost-effective inference at scale. This is compounded by an insatiable demand for tokens by customers, which appears to be ever growing as new capabilities emerge.

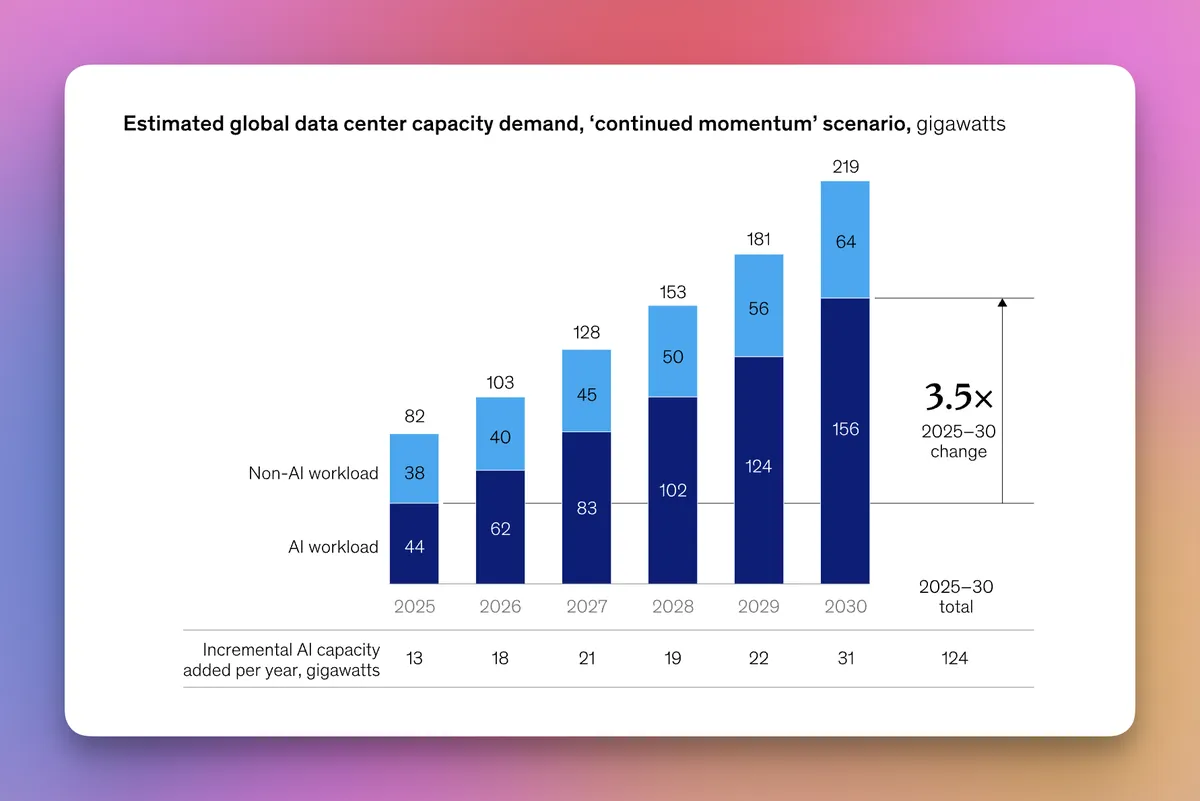

McKinsey, The cost of compute: A $7 trillion race to scale data centers, April 2025

This compute crunch puts pressure on:

- Labs' ability to launch new products which require even more inference

- Balancing trade-offs across R&D and commercial needs in a highly competitive market, where R&D is crucial to long-term success

We've seen a dramatic increase in CapEx allocated to data centres in 2025, with total AI data centre investment commitments approaching $1 trillion over the next five years:

- Project Stargate: On 21 January 2025, President Trump unveiled a $500 billion private-sector initiative with OpenAI, SoftBank, and Oracle. By September, they'd announced multiple data centre sites across Texas, New Mexico, Ohio, and Wisconsin – putting the project ahead of schedule with nearly 7 GW of planned capacity and over $400 billion in investment committed over the next three years.

- Anthropic went multi-cloud at scale - a blockbuster Google Cloud deal for up to 1 million TPUs (~1 GW of compute by 2026), plus a deal with Microsoft and NVIDIA announced in November: a $30 billion Azure compute commitment and up to $15 billion in investment. The company also unveiled a $50 billion data centre investment plan.

- Meta and xAI are building some of the largest AI clusters on the planet. Meta committed $65 billion to data centre expansion, including a "Hyperion" supercluster targeting 2 GW by 2030, scaling to 5 GW. xAI's "Colossus 2" in Memphis is on track to become the world's first gigawatt-scale AI data centre.

- NVIDIA hit a $5 trillion valuation by October – the first company ever to reach that milestone

Power availability is also not evenly distributed – China's grid has significant spare capacity and can connect new demand faster than most Western markets. Memory challenges (SRAM and DRAM supply chain constraints) are also becoming a bottleneck on data centre growth.
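To give a feel for the scale of these commitments, here is a rough back-of-envelope calculation using only the round figures quoted above. It assumes the "nearly 7 GW" and "over $400 billion" Stargate figures can be treated as 7 GW and $400 billion, and that Anthropic's ~1 GW covers the whole facility (chips plus cooling, networking, and other overhead). The function names are made up for this sketch, and the results are order-of-magnitude estimates only.

```python
# Round figures taken from the commitments listed above.
STARGATE_GW = 7.0            # "nearly 7 GW" of planned capacity
STARGATE_USD_BN = 400.0      # "over $400 billion" committed
ANTHROPIC_TPUS = 1_000_000   # "up to 1 million TPUs"
ANTHROPIC_GW = 1.0           # "~1 GW of compute by 2026"

KW_PER_GW = 1_000_000        # 1 GW = 1,000,000 kW


def usd_bn_per_gw(usd_bn: float, gw: float) -> float:
    """Implied investment per gigawatt of capacity, in billions of dollars."""
    return usd_bn / gw


def kw_per_chip(gw: float, chips: int) -> float:
    """Implied all-in power budget per chip, in kilowatts."""
    return gw * KW_PER_GW / chips


stargate_cost = usd_bn_per_gw(STARGATE_USD_BN, STARGATE_GW)
tpu_power = kw_per_chip(ANTHROPIC_GW, ANTHROPIC_TPUS)

print(f"Stargate: roughly ${stargate_cost:.0f}bn per GW")
print(f"Anthropic/Google: roughly {tpu_power:.1f} kW per TPU, all-in")
```

On these assumptions the numbers work out to roughly $57 billion per gigawatt and about 1 kW per chip, which helps explain why power availability, and not just chip supply, is part of the constraint.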

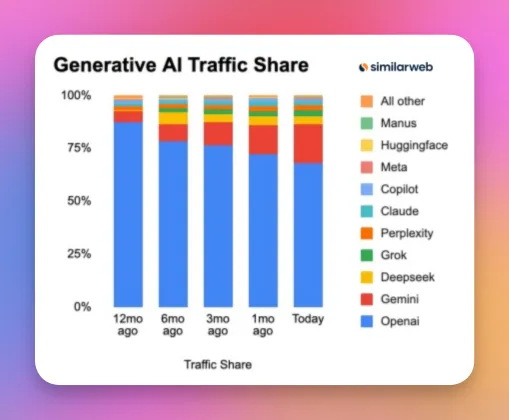

Consumer and Enterprise Adoption

ChatGPT has reached ~800 million weekly active users – up from zero just three years ago, making it one of the top 10 most visited websites globally. Both OpenAI and Anthropic have made major gains in the enterprise space - their enterprise app offerings and API spaces have been major drivers of growth.

SimilarWeb, Dec '25

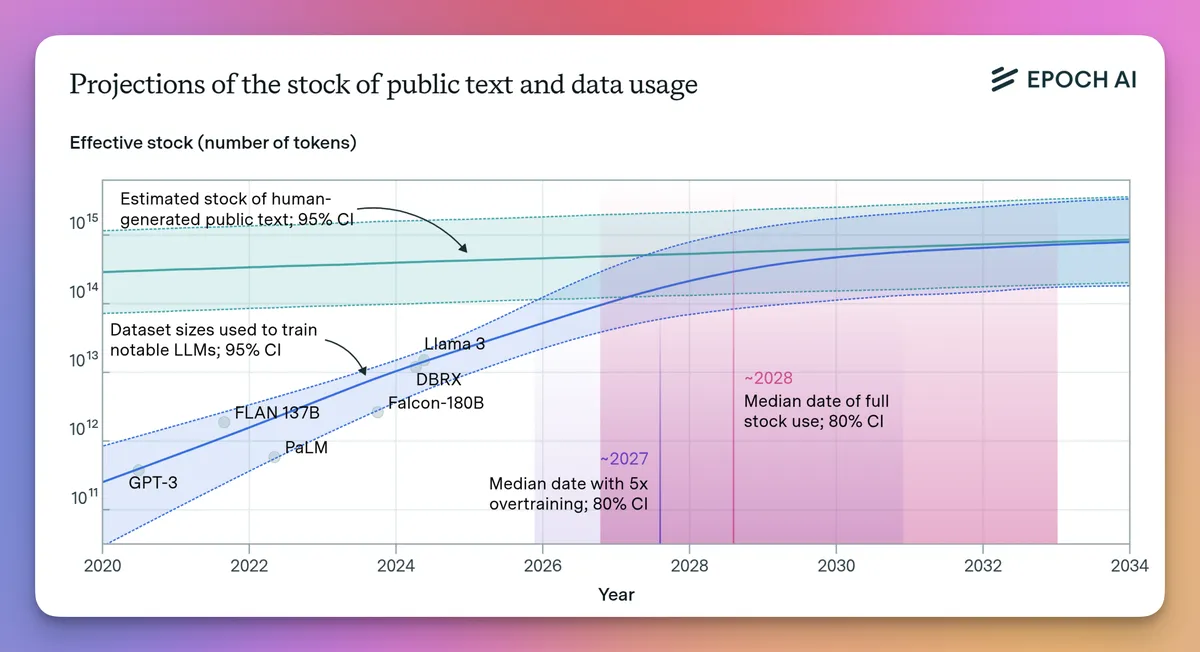

The Data Wall

One big trend is the data constraint on model development. By some estimates, there is enough data to support two major training runs over the next 18–24 months across the major model labs. Without major breakthroughs in the interim, it is unclear how model progress will continue beyond that.

Epoch AI - Projections of the stock of public text and data usage, 2024

Those remaining training runs could still represent an order-of-magnitude improvement on today's core model capabilities. Take coding – at the start of the year, models struggled with anything beyond short coding tasks. By year-end 2025, models like Claude Opus 4.5 and OpenAI's GPT-5.1-Codex-Max - when properly harnessed with tools, evals, and supervision - can sustain autonomous coding runs of 24 hours or more, shipping production-grade code with minimal human intervention. What a further order-of-magnitude improvement from today would look like is very hard to imagine.

Multimodal Progress

We've seen continued advancement in multimodal models – video, speech-to-text, text-to-speech, music / sound and image generation. We are still very early in the development of these capabilities. Video and early research in gaming and world model simulations show huge promise as we go into 2026.

On the R&D front, we have never had so many research and engineering professionals all focused on AI at the same time. NeurIPS 2025 received a record ~21,500 paper submissions. Promising research vectors are emerging across memory, recursive learning, and new model architectures.

AI Achieves Scientific Milestones

In July, AI achieved gold-medal standard on IMO problems (in evaluation) – marking the first time AI approached the level of the world's top teenage mathematicians. The model labs have now turned their attention to the sciences with a number of developments:

- GPT-5 helped researchers progress on a decades-old Erdős problem in mathematics

- OpenAI + Retro Biosciences achieved a 50x increase in expressing stem cell reprogramming markers

- GPT-5 optimised a molecular cloning protocol by 79x – a task requiring wet lab expertise

- Google DeepMind released what Nature called a "spectacular general-purpose science AI"

- DeepMind's Weather Lab launched in partnership with the U.S. National Hurricane Center

- WeatherNext 2, DeepMind's most advanced weather forecasting model, was released in November

What Models Can Do Now

Models today can execute a wide range of tasks they couldn't at the start of 2025:

- Autonomous coding sessions up to 24+ hours in some cases, shipping production-grade code

- Multi-step reasoning with adaptive "thinking" modes

- Complex financial modelling, scenario planning, and graduate-level problem solving

- Deep research – synthesising hundreds of sources into structured reports

- Processing large documents and building complex spreadsheets

- Direct computer control – browsers, forms, multi-step workflows (e.g. Claude's Computer Use and OpenAI Agent - both still in nascent form)

- Agentic tool use – chaining APIs, databases, and tools autonomously

- Long-form content, near-photorealistic images, and short video clips (Sora 2, Veo 3)

- Processing up to ~3 hours of video in a single context window (e.g. Gemini 2.5)

- Real-time voice conversations with low latency and emotional nuance

- World models like DeepMind's Genie 3 generating interactive 3D environments from text prompts – enabling unlimited simulated training grounds for robotics and AI agents

Even if no new models emerged in 2026, what exists today – properly harnessed with the right products and workflows – can already do extraordinary things. There are months, if not years, of untapped potential in the current technology alone. Contact centres, digital channels, marketing, back-office processes, software development: the applications are already vast.

Enterprise Adoption - Surging But Still Early

In 2024, 78% of organisations reported using AI in some form (up from 55% in 2023), according to the Stanford AI Index 2025. Microsoft's CEO revealed that 20-30% of all code at Microsoft is now written by AI assistants. We're also starting to see credible pilots of end-to-end automation – AI systems completing tasks autonomously rather than merely suggesting or editing human work – though augmentation remains the dominant pattern today. Enterprises are still at the very start of their adoption curve.

Regulation: Moving from Principles to Implementation

Governments have shifted from a light touch to active implementation:

- The EU AI Act began phased implementation in 2025, with high-risk provisions taking effect from August 2026

- The U.S. regulatory approach flipped with the Trump administration – revoking the prior AI executive order and replacing it with an "AI Action Plan" focused on outcompeting China

- China implemented its 2023 Generative AI regulations, requiring all services to register with the government and implement censorship and watermarking. In September 2025, new AI Content Labeling Measures took effect, mandating that all AI-generated content carry visible labels and embedded metadata – and draft AI Ethics Management rules now require companies to establish ethics committees for AI project review

- The Paris AI Action Summit (February 2025) brought together governments, companies, and researchers; meanwhile, the International AI Safety Report (January 2025) – backed by 30 countries – provided the first consensus scientific assessment of frontier AI risks

The full risks and benefits are not yet clear to governments and the average consumer, with much of the regulatory response preparatory in nature.

Closing Thoughts

2025 marked the moment AI shifted from impressive demos to a durable, general-purpose capability layer. Intelligence gains saturated the benchmarks. Compute and data constraints emerged as the new bottlenecks. And enterprise adoption—while surging—remains at the very start of its curve.

The gap is widening. Not between those who use AI and those who don't, but between those who redesign how work gets done and those still experimenting at the surface.

We don't need to wait for new models. Years of productivity, creativity, and competitive advantage are already locked up in what exists today. The question heading into 2026 isn't whether AI will matter—it's whether you're positioned to compound alongside it.

In Part 2, I'll share my 10 predictions for where the biggest shifts will land in 2026 – from agentic workflows and job displacement to the IPOs that will define the next chapter.

Sample Sources & References

2025 Developments

Frontier Models & Labs:

- Introducing GPT-5 – OpenAI

- Claude Sonnet 4.5 – Anthropic

- Gemini 3 – Google DeepMind

- OpenAI launches two 'open' AI reasoning models – TechCrunch

Open Source & China:

- China's cheap, open AI model DeepSeek thrills scientists – Nature

- The Chinese finance whizz whose DeepSeek AI model stunned the world – Nature

- China's Moonshot AI Releases Trillion Parameter Model Kimi K2 – HPC Wire

- 'Another DeepSeek moment': Chinese AI model Kimi K2 stirs excitement – Nature

Compute & Infrastructure:

- NVIDIA hits $5 trillion valuation as AI boom powers meteoric rise – Reuters

- AI Developments That Reshaped 2025 (Project Stargate) – TIME

Data Constraints:

Scientific Breakthroughs:

- DeepMind and OpenAI models solve maths problems at level of top students – Nature

- AI Took on the Math Olympiad—But Mathematicians Aren't Impressed – Scientific American

- Gemini with Deep Think achieves gold medal standard at IMO – Google DeepMind

- Early experiments in accelerating science with GPT-5 – OpenAI

- WeatherNext 2: DeepMind's most advanced weather forecasting model – Google

- Supporting better tropical cyclone prediction with AI – Google DeepMind

- Genie 3: A new frontier for world models – Google DeepMind

Robotics & Multimodal:

- Gemini Robotics – Google DeepMind

- Gemini Robotics 1.5 brings AI agents into the physical world – DeepMind

Adoption & Enterprise:

- The 2025 AI Index Report – Stanford HAI

- The Year AI Went Fully Mainstream: A Complete 2025 Recap – Scalevise

Regulation & Policy: